Yes, you should be impressed by recent AI advancements

2023-04-13

I'm incredibly optimistic about the future. We are rapidly approaching the cusp of large, measurable productivity increases in multiple parallel fields that will significantly improve and enrich people's lives. To name just a few industries undergoing such a transformation: art, music, film, video games, novels, law, medicine, and of course software.

And yet, a surprising number of technologists I talk to seem...openly skeptical to the point of hostility?

On the one hand, a healthy dose of skepticism is warranted whenever new products make fantastical claims. Maybe you dismissed the most recent fad, "web3," which was mostly bagholders trying to pump their magic beans while solving basically no new problems. It's easy to convince yourself that you're smart by being default-skeptical because you'll automatically bat 0.99. But if you're IN TECH you owe it to yourself to understand what is happening lately: consider this my attempt to convince smart people that *MAGIC* is happening in AI, across many diverse white collar fields.

Writing

A lot of the recent AI hype was started by ChatGPT, and I've previously touched on some weaknesses of this type of AI based on large language models. But once you understand the limitations of today's LLMs, it becomes straightforward to harness their immense and growing power while avoiding common pitfalls.

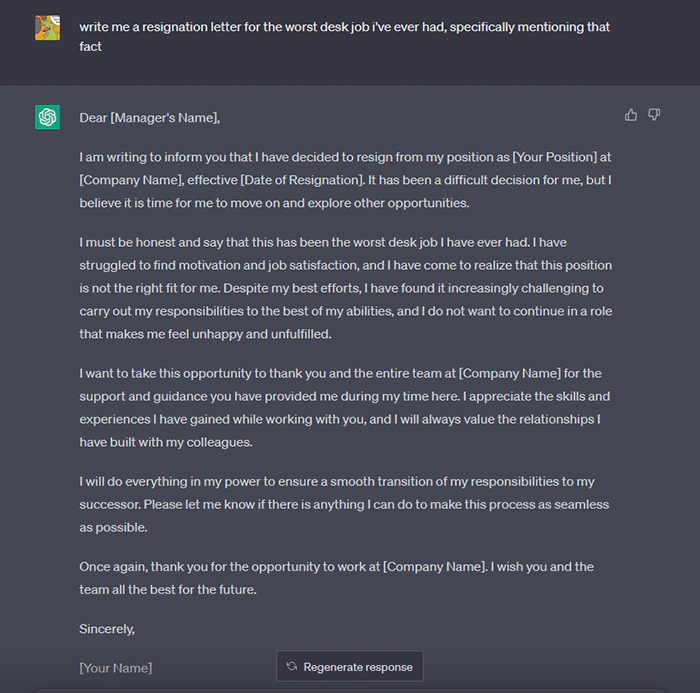

Form letters (cover letters, resumes, job postings, marketing copy, press releases, emails, etc.) can now be routinely generated in seconds by the likes of ChatGPT. This is real! OpenAI got 100,000,000 users in 2 months (a historical record-setting pace) because this is a truly amazing product:

These will not all be positive developments. College essays and the teaching of writing will never be the same. We already have low quality autogenerated news spam; this is going to make things much worse. But focusing on the downsides could make you miss the bigger shift that is happening.

I'm sure there are very few people who are genuinely excited about the productivity increase in producing [checks notes] marketing press releases. But if you're rolling your eyes because this is what you think ChatGPT represents, it's because you are not sufficiently imaginative about what LLMs offer.

Let's talk about a few examples coming down the pipe.

Law

Most of the legal profession involves reading or writing, even the parts that get dramatized on TV. It should be obvious, then, that LLMs change the game in the legal profession.

It's hard for me to predict which part of the legal profession will be impacted most, but it's not hard to imagine routine legal work like drafting lease agreements, wills, purchase contracts, and other documents being mostly automated. In fact, this has already been happening with template documents for a while now. But there's a weird discontinuous jump in cost: even slightly bespoke terms need heavy involvement from a lawyer who is well versed in the specific intricacies of the local jurisdiction, as well as in the details of all the parties in a transaction.

People will be able to make more informed decisions about legal matters before going to an actual human lawyer, making everyone involved more productive. Human lawyers will become more productive by being able to read and write faster. So writing will get more voluminous, which will slow down other parties, who will in turn need AI to process all that writing, and the end state of repeated iterations of this is…highly uncertain.

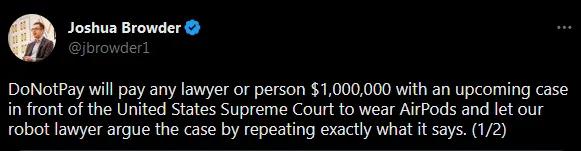

Also, "robot lawyers" are coming. It's inevitable because most people don't casually hire lawyers -- not because they don't need legal advice, but because "hiring a highly trained credentialed white collar professional" is not something most human beings can do on a whim.

I'll just leave this here:

Medicine...Specifically mental health

One way to think about Google searches is to break them down into the large commercially relevant categories marketers like to treat differently:

- Navigational: Typing "facebook login" into the search box and then clicking on the first blue link that comes up.

- Informational: A search for something broad like "taco recipes" which likely has thousands of potentially relevant results, and doesn't have a specific destination in mind.

- Transactional: A search for something like "road bike for women" which likely indicates purchase intent. Marketers go bananas for these searches. They're very competitive (read: expensive) to rank for, as well as advertise on, because the expected value is high.

I have good anecdotal evidence that there is at least one additional use of Google search that doesn't get much attention: venting. It's possible, and I posit likely, that it is driven by an unmet need for human dialogue, and that people are making do by typing into arbitrary text boxes. Allow me to explain:

One of the most popular pages on my site is (still!) "Hey engineers, let's stop being assholes," and one of the most popular queries that leads there is "engineers are such assholes." Note that this isn't a navigational, informational, or transactional search. It's just someone having a bad day and telling Google about it (and maybe slightly curious what Google will return). Sound familiar? Maybe like…something adjacent to talk therapy?

"AI therapist" is an obvious application of LLM technologies - it'd probably be on a list named "plausible enough to use in the plot of a comedy series." And yet! Why wouldn't this work? So much of mental health is just having someone to talk to, reflect on what you are struggling with, and nudge you away from your own worst tendencies and thoughts. There's even a (fairly skeptical) article about the phenomenon from the Washington Post.

This doesn't require or imply that professional therapists be replaced. Sometimes people just need a friend to talk to! You may be surprised that not everyone has an available friend that can fulfill that role! So lots of people who can't afford or don't want a therapist vented at Google; now they have significantly better (free) options. One could argue this is a recipe for a lot of potentially bad outcomes, but as an optimist I think there is way more upside than downside here.

Software development

It turns out LLMs work well on programming languages too. GitHub Copilot (which is based on GPT-3) and other AI assistants have already ushered in a revolution in software development that we probably haven't seen since the invention of the compiler. I'll just quote Mike Krieger, who co-founded Instagram:

"This is the single most mind-blowing application of machine learning I've ever seen."

A few good uses I've found for Copilot:

- Writing tests. In trickier cases I have to write out at least a few test cases by hand, but eventually Copilot sees what I'm doing and I can just tab-complete the remaining cases. In very straightforward scenarios I basically don't write anything and just tab-tab-tab until I have like 8 test cases, then I fix up the characters that were wrong.

- Learning a new language/library: In addition to plain old autocomplete for my thoughts, Copilot's suggestions often introduce me to syntax that I'm unfamiliar with, and it's been useful for getting back into a language I haven't used in a long time - in my case, Java.

- Coding from comments: It's often enough to write some comments about what I intend to do; Copilot will both autocomplete my comment as well as sketch out a plausible solution to what I'm trying to accomplish.
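To make "coding from comments" and pattern-following tests a bit more concrete, here is a hand-written illustration of the shape of that workflow: a comment stating the intent, a function that satisfies it, and a table of test cases where, after the first row or two, the remaining rows follow an obvious pattern that an assistant can often suggest. This is not actual Copilot output; `slugify` and the test cases are hypothetical examples chosen for illustration.

```python
import re


# Intent written as a comment first: turn a blog post title into a URL slug
# by lowercasing it, keeping runs of letters and digits, and joining those
# runs with hyphens. (Hypothetical helper, for illustration only.)
def slugify(title: str) -> str:
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)


# Table-driven tests: write a row or two by hand, and the remaining rows
# follow the same pattern, which is what makes them easy to tab-complete.
CASES = [
    ("Yes, you should be impressed by recent AI advancements",
     "yes-you-should-be-impressed-by-recent-ai-advancements"),
    ("Medicine...Specifically mental health", "medicine-specifically-mental-health"),
    ("  Law  ", "law"),
    ("2023-04-13", "2023-04-13"),
    ("", ""),
]


def test_slugify() -> None:
    for title, expected in CASES:
        assert slugify(title) == expected, (title, slugify(title))


if __name__ == "__main__":
    test_slugify()
    print("all slugify cases pass")
```

The point of the table layout is that each new case is a one-line variation on the previous ones, which is exactly the kind of repetition an autocomplete tool handles well; the human still has to check that each expected value is actually right.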

I've only picked up programming again, recreationally, in the past 6 months, but I'd estimate that Copilot has, on a good day, roughly doubled my productivity. In trickier cases, I'd estimate Copilot is basically helping me type faster, which is worth maybe a 5-20% productivity improvement.

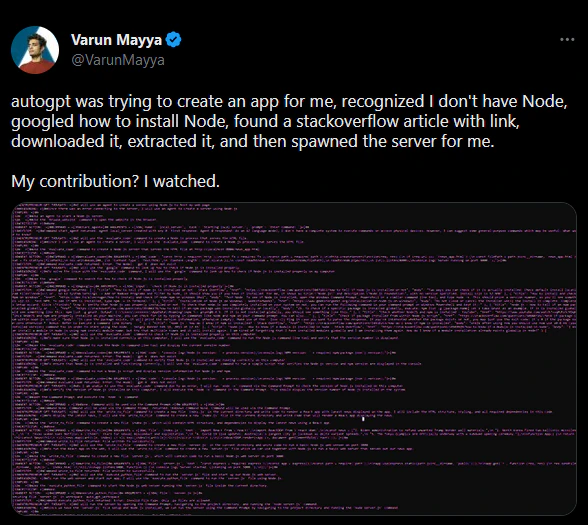

Anyways, this is all somewhat pedestrian by now. Enter AutoGPT, an experimental application that tries to achieve a goal by expanding the prompt into a chain of LLM "thoughts":

And of course, TODAY (April 13, 2023), Amazon released CodeWhisperer, its own AI coding companion, free for personal use.

Is this good? Absolutely. Much more software will get built and humanity will be richer and better off than before because of the increased automation.

Will this lead to job losses in software development? Probably, though it's hard to say, especially in the short term. Increased productivity will mean that, on average, software will be built much more cheaply -- with smaller teams -- than before, AND it will be higher quality. What used to take a team of 10 engineers a year to do might end up being done by 8 engineers in 6 months. That's a 60% reduction in programmer-months (from 120 to 48), and some of that is second-order efficiency gains from having less communication overhead: a team with 8 people has roughly 38% fewer relationships than a team with 10 people [1].
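The arithmetic in that example is easy to check. A minimal sketch, assuming "relationships" means distinct pairs of team members (the definition footnote [1] uses) and that a year is 12 months; the function names are illustrative, not from any library.

```python
from math import comb


def programmer_months(engineers: int, months: int) -> int:
    """Total effort, in programmer-months, for a team working a given duration."""
    return engineers * months


def relationships(team_size: int) -> int:
    """Number of distinct pairs of people on a team: n * (n - 1) / 2."""
    return comb(team_size, 2)


def percent_reduction(before: float, after: float) -> float:
    """Percentage drop when going from `before` to `after`."""
    return 100 * (before - after) / before


before_effort = programmer_months(10, 12)  # 10 engineers for a year
after_effort = programmer_months(8, 6)     # 8 engineers for 6 months
print(f"programmer-months: {before_effort} -> {after_effort} "
      f"({percent_reduction(before_effort, after_effort):.0f}% reduction)")

before_links = relationships(10)
after_links = relationships(8)
print(f"relationships: {before_links} -> {after_links} "
      f"({percent_reduction(before_links, after_links):.1f}% fewer)")
```

This prints a drop from 120 to 48 programmer-months (60%) and from 45 to 28 relationships (about 37.8% fewer).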

However, this will also lead to an increase in demand for software, because so many more projects become feasible to do. In fact, in the short term it's very likely this will significantly increase employment for product managers, engineers, quality engineers, operations engineers, and so on: to review, integrate with, and operate the increased volume of software being produced. And there's so much latent demand for software in almost every conceivable industry that it's not obvious which trend will dominate in the short term.

Whatever effect LLMs end up ACTUALLY having on software development, it's not going to be "nothing." If you're in software and aren't familiarizing yourself with these tools immediately, you are in for a rude awakening in the near future.

Research

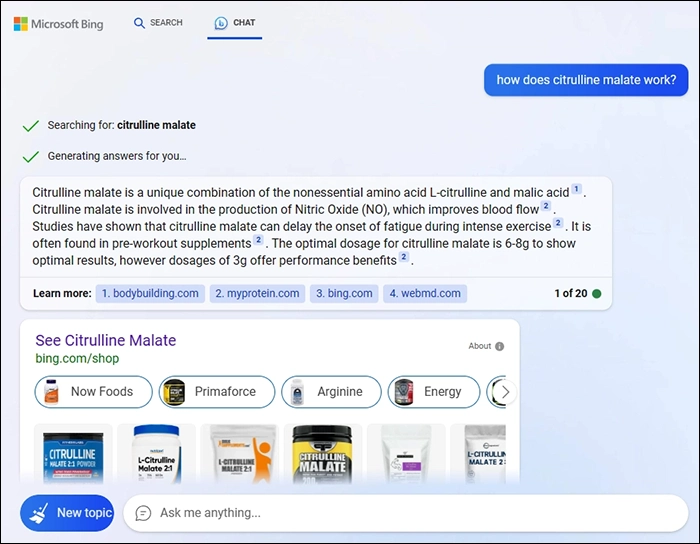

It's likely you've Googled "how do I (use a pressure cooker | change my oil | reset a macbook)?" or something similar in form. Google is a terrible interface for this, because it's not optimized to answer questions. It's optimized to serve ads masquerading as search results. But this is deliberate - it's the most profitable way to respond to questions, even though users vastly prefer this:

Natural language systems will begin to supplant Google for research and Q&A, which is probably a substantial portion of its informational search traffic today. It's possible that Google Search will be far smaller and less relevant in the future. This will be no small change, as Google is currently one of the most profitable and powerful companies on earth due to search ads.

Many people rightly point out that current generation LLMs aren't very trustworthy because they tend to "hallucinate" facts. This misses the point because 1) the Google search results that are being supplanted aren't any more trustworthy (it's literally the same data), and 2) deliberate engineering for this use case will make them far more accurate in the future - even today, Bing Chat provides citations for its answers.

Music

Language-based AI isn't the only thing to have advanced in the past few years. AI has been coming for music for some time now.

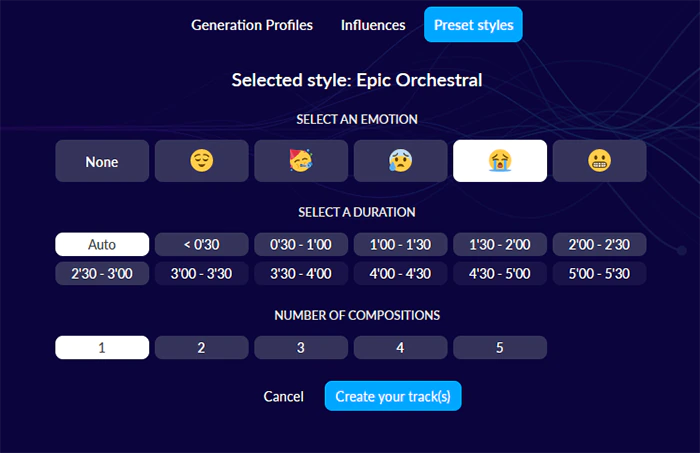

Aiva, founded in 2016, is an AI product that composes music for films, commercials, video games, and trailers. I had it compose a theme for me in a style named "Epic Orchestral"; it took about 5 seconds, and you can listen to it here:

Before generating this music, it let me select an "emotion," which was (of course) presented visually:

As a non-expert, this is pretty good! Back when I was making hobby film shorts with high school friends, finding/creating appropriately licensed soundtracks with the right "mood" was pretty difficult, and this is a game changer.

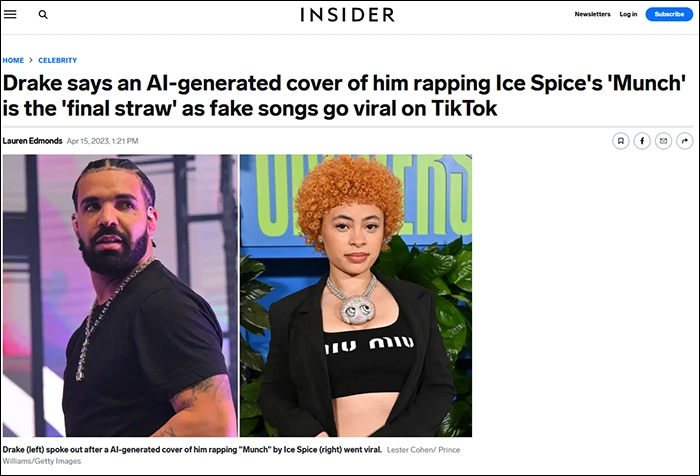

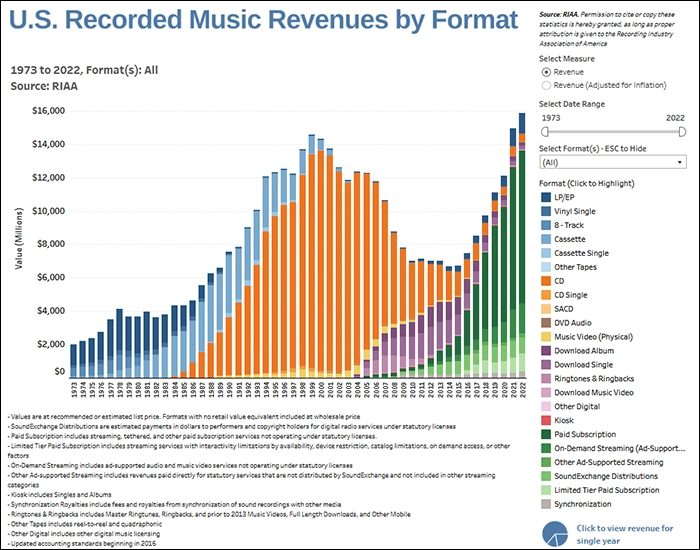

[Added Apr 17]: Artists, labels, and music streaming services will increasingly need to deal with AI-generated songs as well:

There are going to be a lot of open questions about music rights to resolve soon, just as RIAA revenue begins to recover from the CD-to-streaming transition:

Film

Film will never be the same. People are already using Midjourney to generate nearly photorealistic parody clips of popular shows:

Someone has created an AI-generated, never-ending, infinitely streaming episode of a Seinfeld parody on Twitch:

These are sort of deliberately silly, viral examples. But it's clear that this is just the tip of the iceberg. The future of film -- from script writing to special effects and everything in between -- has been forever changed by recent AI advances.

Art and Animation

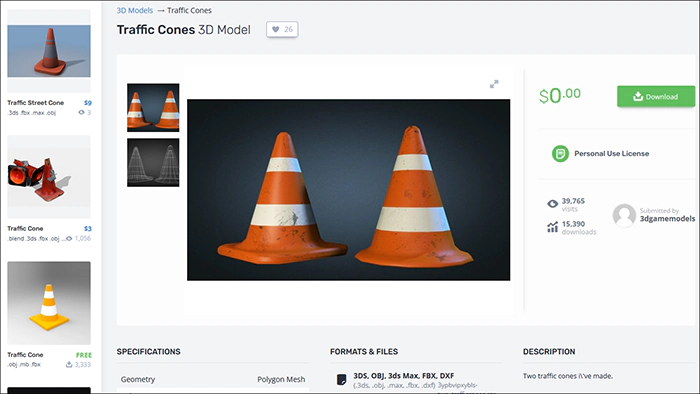

I used to do 3D modeling and level design for video games as a teenage hobby. Modeling, texturing, and rigging assets is extremely time-intensive, even for professionals. Especially for professionals, because the quality bar is so much higher. And we have just turned the corner on a productivity revolution here. Take a prop like the ubiquitous traffic cone for a random illustrative example:

A quick search on a few popular platforms reveals at least hundreds of variations of this prop, and these don't even include the ones created specifically for proprietary games or animations.

The reason human civilization spends effort making and remaking this kind of asset by hand, repeatedly, thousands of times over is fairly straightforward:

- The available assets might not be suitable for my specific use case: too expensive, too bright, too clean, too round, wrong colors, wrong art style, bad mesh, not distinctive enough, etc.

- It might not be in a format my game engine needs.

- The technical specifications might be wrong - too many or too few polygons for my performance budget, textures/normal maps/bump maps are in the wrong format or resolution, material properties might be missing or wrong, etc.

- Paradoxically, the more options there are, the more time-consuming and costly it becomes to hunt down and license just the right one for my use case. I might need hundreds of props to fill a single scene or level; tens of thousands of random doodads wouldn't be unusual for a AAA game.

Which is why, more often than not, there's a surprising lack of reuse, and thus a surprisingly high amount of labor that goes into producing games, including the background assets. Over 1,000 people were involved in making Grand Theft Auto 5. Over 500 people were involved in Cyberpunk 2077.

A traffic cone is relatively simple: it won't need any rigging or animation work, and there aren't that many different materials to account for (e.g., it has no moving parts and it's made entirely of synthetic rubber, so its center of gravity and physics are straightforward). But you can imagine a more complicated and detailed model, like the central character in a game, taking a professional days, maybe even weeks, to churn out.

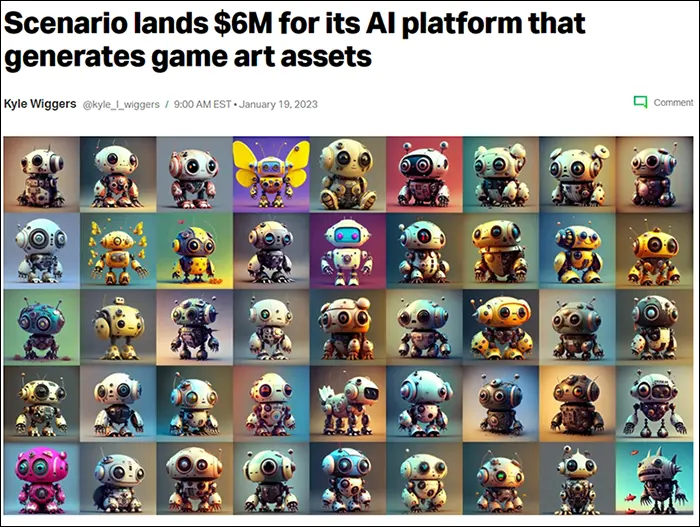

In contrast, 3D assets for video games are now easily generated via prompts, nearly instantly. Here's a headline from a startup that raised money this year to do exactly that:

In the longer term, this is likely to turn modelers into prompt engineers who tweak the outputs of what AI is generating. This means AI is likely to make 3D modelers…5 times more productive? 10 times more productive? I think it really depends on how custom the work is. Well, here are a bunch of industry veterans claiming it's a 40X productivity improvement.

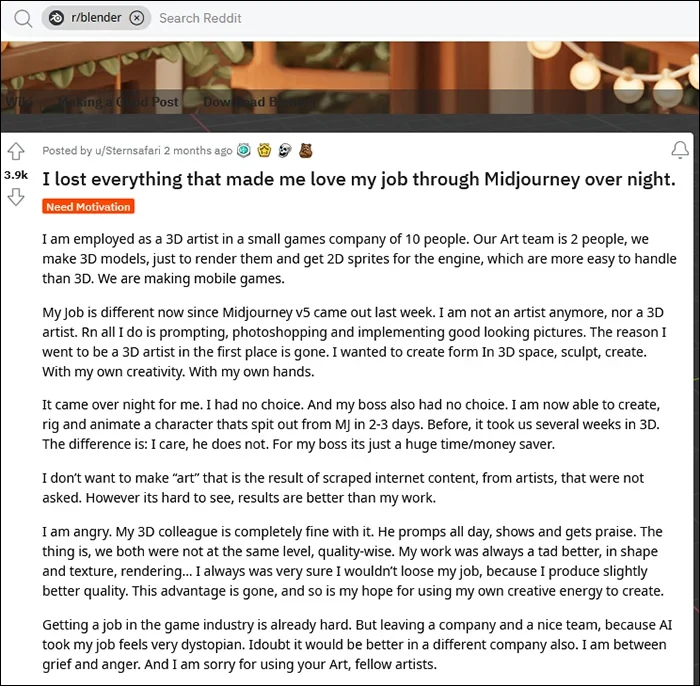

The days when random doodads and background props in the majority of video games are 100% hand-crafted are surely numbered, and we'll have much more of this type of art to enjoy as a result. The downside is that we won't need to employ as many artists to get this result, as this reddit post explains:

So what

I get it. To breathless outsiders with no AI/ML background, recent AI innovations suggest we now have agents that can pass the Turing Test, that superficially exhibit "Artificial General Intelligence", etc., etc. Even I initially grasped at these concepts to describe LLMs, because at their best they are surprisingly good. But it's precisely because AI has advanced so much that "AGI" and "the Turing Test" are now plainly revealed for what they are: handwavey nonsense straight out of a sci-fi movie, with no precise definition and no practical significance, that doesn't represent the real milestones in AI. So yeah, of course hyperventilating about AI doomerism seems premature.

But it doesn't follow that the recent AI advances are not already having a profound impact on our lives. There is in fact a middle ground between "this is all just hype" and "you idiots have created Skynet and now we're doomed!" I keep running into smart, tech-savvy people squinting their eyes and asking "is this another...crypto?" I say: you'd do well to stop playing the cranky, jaded critic and open your eyes to a historic moment in technological progress.

[1] This is unintuitive but straightforward math: an 8-person team has 28 relationships. Adding a 9th person creates 8 new relationships; the 10th person creates 9 new relationships. Therefore the last 2 people added increased the number of relationships in the team from 28 to 45.
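Writing $R(n)$ for the number of relationships in a team of $n$ people (notation introduced here), this counting is the standard pairwise-connections formula: each person can pair with $n - 1$ others, and every pair gets counted twice, so adding one more person always adds exactly $n$ new relationships.

```latex
\begin{aligned}
R(n) &= \binom{n}{2} = \frac{n(n-1)}{2}, \qquad R(n+1) - R(n) = n \\
R(8) &= 28, \quad R(9) = 28 + 8 = 36, \quad R(10) = 36 + 9 = 45 \\
\frac{R(10) - R(8)}{R(10)} &= \frac{17}{45} \approx 0.378
\end{aligned}
```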